Reference Document: HW2 Data Mining.pdf

Association Analysis: Basic Concepts and Algorithms

CS273 Midterm Exam - Introduction to Machine Learning

Frequent, Closed, Maximal Itemsets - YouTube

FIM7: The Apriori Hash Tree for Fast Subset Computation - YouTube

Hash Tree Method to Count Support in Apriori Algorithm | Insert | subset(Ck, t) function - YouTube

Q1

- Write down the expression for the MSE on our training set

- Write down the gradient of the MSE

- Give pseudocode for a (batch) gradient descent function theta = train(X,Y), including all necessary elements for it to work
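As a sketch of the first two parts, assume a linear predictor with two parameters a and b, so that the prediction for input x is a + b x, and a training set of m pairs (x^(i), y^(i)). This model is an assumption made here for illustration; the exam's actual model is defined in the question figure. Under that assumption the MSE and its gradient take the standard form:

```latex
J(a, b) = \frac{1}{m} \sum_{i=1}^{m} \left( y^{(i)} - a - b\,x^{(i)} \right)^2

\frac{\partial J}{\partial a} = -\frac{2}{m} \sum_{i=1}^{m} \left( y^{(i)} - a - b\,x^{(i)} \right)
\qquad
\frac{\partial J}{\partial b} = -\frac{2}{m} \sum_{i=1}^{m} \left( y^{(i)} - a - b\,x^{(i)} \right) x^{(i)}
```

Each gradient component is the derivative of the squared residual, averaged over the training set; the factor 2 is sometimes absorbed by defining the loss with 1/(2m) instead.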

theta = [a, b]

alpha = 0.01

stopping condition = 0.0001

while (True) {

theta_new = theta - alpha * grad_MSE(theta)

if abs(MSE(theta_new) - MSE(theta)) < stopping condition {

break

}

theta = theta_new

}
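A runnable version of this loop might look like the following. It is a minimal sketch, assuming a linear model a + b x with parameters theta = [a, b] and a stopping test on the change in MSE; the helper names `mse` and `gradient`, the zero initialization, and the `max_iters` safety cap are choices made here, not part of the original answer.

```python
def mse(theta, X, Y):
    """Mean squared error of the assumed linear model a + b*x."""
    a, b = theta
    return sum((a + b * x - y) ** 2 for x, y in zip(X, Y)) / len(X)


def gradient(theta, X, Y):
    """Gradient of the MSE with respect to [a, b]."""
    a, b = theta
    m = len(X)
    residuals = [a + b * x - y for x, y in zip(X, Y)]
    grad_a = 2.0 / m * sum(residuals)
    grad_b = 2.0 / m * sum(r * x for r, x in zip(residuals, X))
    return [grad_a, grad_b]


def train(X, Y, alpha=0.01, tol=1e-4, max_iters=100_000):
    """Batch gradient descent: every step uses the full training set."""
    theta = [0.0, 0.0]  # assumed starting point
    loss = mse(theta, X, Y)
    for _ in range(max_iters):  # cap iterations in case of non-convergence
        grad = gradient(theta, X, Y)
        new_theta = [t - alpha * g for t, g in zip(theta, grad)]
        new_loss = mse(new_theta, X, Y)
        converged = abs(loss - new_loss) < tol
        theta, loss = new_theta, new_loss
        if converged:
            break
    return theta


# Hypothetical data generated from y = 1 + 2x, for illustration only.
X = [0.0, 1.0, 2.0, 3.0, 4.0]
Y = [1.0, 3.0, 5.0, 7.0, 9.0]
theta = train(X, Y, tol=1e-12)
print(theta)
```

With the default tolerance of 0.0001 the loop stops earlier and returns a coarser estimate; a smaller tolerance trades more iterations for a closer fit.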

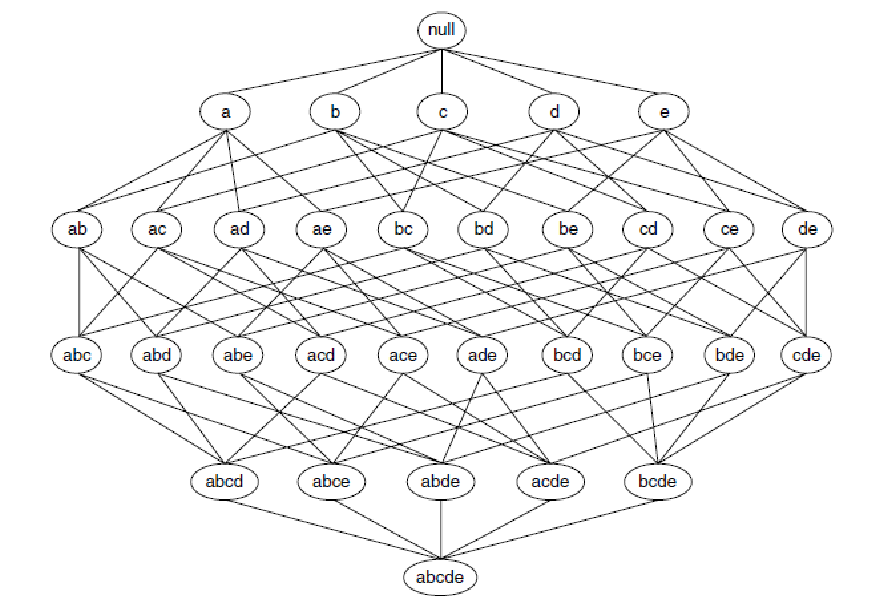

Q2

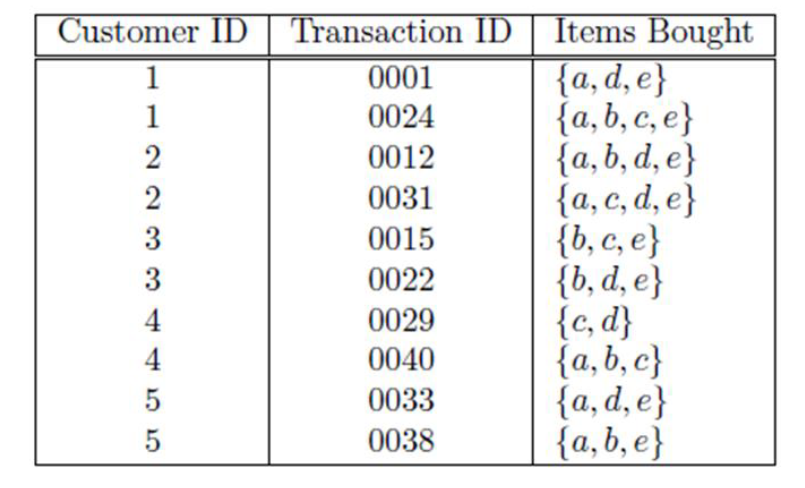

Compute the support for item-sets {e}, {b, d}, and {b, d, e} by treating each transaction ID as a market basket

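Support({e}) = (number of transactions containing {e}) / (total number of transactions)

Support({b, d}) = (number of transactions containing {b, d}) / (total number of transactions)

Support({b, d, e}) = (number of transactions containing {b, d, e}) / (total number of transactions)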
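The support of an item-set is the fraction of baskets that contain every item in it. A minimal sketch of that computation follows; the `baskets` list is hypothetical and does not reproduce the transaction table from the question, so the printed values illustrate the method only.

```python
from fractions import Fraction


def support(itemset, baskets):
    """Fraction of baskets that contain every item of `itemset`."""
    itemset = set(itemset)
    hits = sum(1 for basket in baskets if itemset <= set(basket))
    return Fraction(hits, len(baskets))


# Hypothetical baskets for illustration only; NOT the table from the question.
baskets = [
    {"a", "d", "e"},
    {"a", "b", "c", "e"},
    {"b", "d", "e"},
    {"b", "d"},
    {"c", "e"},
]

for itemset in ({"e"}, {"b", "d"}, {"b", "d", "e"}):
    print(sorted(itemset), support(itemset, baskets))
```

Using `Fraction` keeps the result in the count/total form that the answer lines use, rather than a rounded decimal.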

Q3

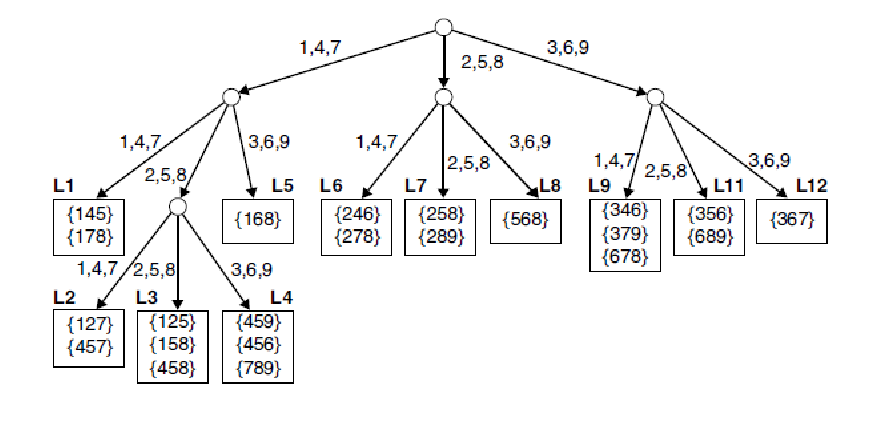

Hash Tree

Given a transaction that contains items {1, 3, 4, 5, 8}, which of the hash tree leaf nodes will be visited when finding the candidates of the transaction?

The leaf nodes that will be visited using the hash tree are L1, L3, L5, L9, and L11.

| First Item Explored | Leaf Nodes Visited |
|---|---|
| 1 | L1, L3, L5 |
| 3 | L9, L11 |
| 4 | L3 |
| 5 | - |
| 8 | - |
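One way to see why items 5 and 8 lead to no leaf is to list the subsets of the transaction grouped by their smallest item. The sketch below assumes the tree stores candidate 3-item-sets (the candidate size is an assumption here; it is fixed by the figure) and that the traversal fixes the first item at the root. It does not model the actual tree, so it cannot reproduce the leaf labels, only the grouping.

```python
from itertools import combinations

transaction = [1, 3, 4, 5, 8]
k = 3  # assumed candidate size


def subsets_by_first_item(transaction, k):
    """Group all k-item subsets of the transaction by their smallest item."""
    items = sorted(transaction)
    groups = {item: [] for item in items}
    for subset in combinations(items, k):
        # combinations() on sorted input yields sorted tuples,
        # so subset[0] is the smallest item of the subset.
        groups[subset[0]].append(subset)
    return groups


groups = subsets_by_first_item(transaction, k)
for item, subsets in groups.items():
    print(item, subsets)
```

No 3-item subset of the transaction can start with 5 or 8, because fewer than two larger items remain after them, which matches the empty rows in the table.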

Q4

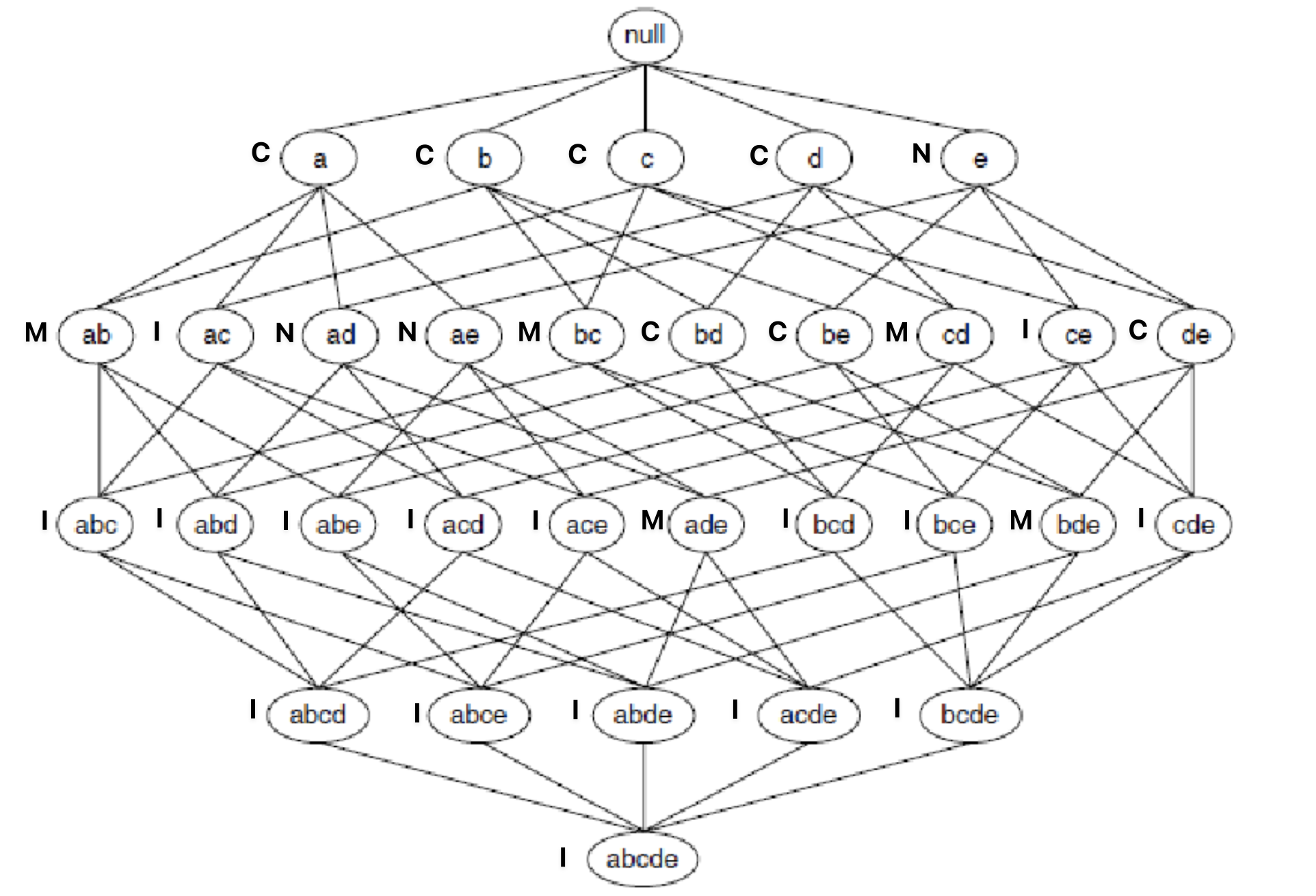

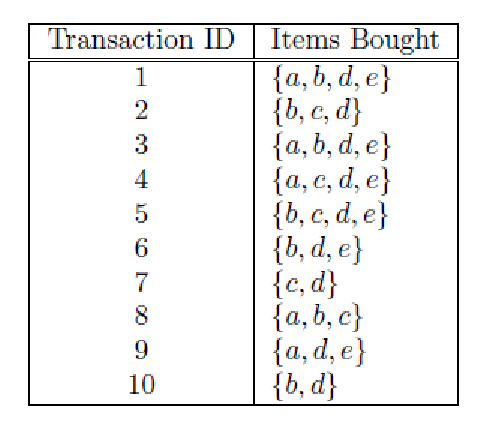

Given the lattice structure shown in the following figure and the transactions given in the table, label each node with the following letter(s):

- M if the node is a maximal frequent item-set

- C if it is a closed frequent item-set

- N if it is frequent but neither maximal nor closed

- I if it is infrequent

Assume that the support threshold is equal to 30%.

| 1 Item-set | Support |
|---|---|
| {a} | 5/10 |
| {b} | 7/10 |
| {c} | 5/10 |
| {d} | 9/10 |
| {e} | 6/10 |

| 2 Item-set | Support |
|---|---|
| {a, b} | 3/10 |
| {a, d} | 4/10 |
| {a, e} | 4/10 |
| {b, c} | 3/10 |
| {b, d} | 6/10 |
| {b, e} | 4/10 |
| {c, d} | 4/10 |
| {d, e} | 6/10 |

| 3 Item-set | Support |
|---|---|
| {a, d, e} | 4/10 |
| {b, d, e} | 3/10 |

| 4 Item-set | Support |
|---|---|
| - | - |
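The labels can be derived mechanically from the support tables above. The sketch below copies those support counts as-is and assumes that any item-set not listed is infrequent (label I). A frequent item-set is maximal if it has no frequent proper superset, and closed if no proper superset has the same support; since every maximal item-set is also closed, the hypothetical helper `label_itemsets` reports M for those, C for closed but not maximal, and N otherwise.

```python
from fractions import Fraction

# Support counts out of 10 transactions, copied from the tables above.
SUPPORT = {
    "a": 5, "b": 7, "c": 5, "d": 9, "e": 6,
    "ab": 3, "ad": 4, "ae": 4, "bc": 3, "bd": 6,
    "be": 4, "cd": 4, "de": 6,
    "ade": 4, "bde": 3,
}
N_TRANSACTIONS = 10
MIN_SUPPORT = Fraction(3, 10)  # the 30% threshold


def label_itemsets(support, n, min_support):
    """Label each frequent item-set as M, C, or N."""
    counts = {frozenset(name): c for name, c in support.items()}
    frequent = {s: c for s, c in counts.items()
                if Fraction(c, n) >= min_support}
    labels = {}
    for itemset, count in frequent.items():
        # Infrequent supersets always have lower support, so only
        # frequent proper supersets matter for both tests.
        supersets = [s for s in frequent if itemset < s]
        if not supersets:
            label = "M"  # maximal (and therefore closed)
        elif all(frequent[s] < count for s in supersets):
            label = "C"  # closed but not maximal
        else:
            label = "N"  # frequent, neither maximal nor closed
        labels["".join(sorted(itemset))] = label
    return labels


labels = label_itemsets(SUPPORT, N_TRANSACTIONS, MIN_SUPPORT)
for name in sorted(labels, key=lambda s: (len(s), s)):
    print(name, labels[name])
```

The result depends entirely on the support values entered, so any correction to the tables should be mirrored in `SUPPORT` before reading off the labels.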